Build software users love. Our software quality assurance services ensure every release exceeds expectations.

Get guaranteed results and maximize business value

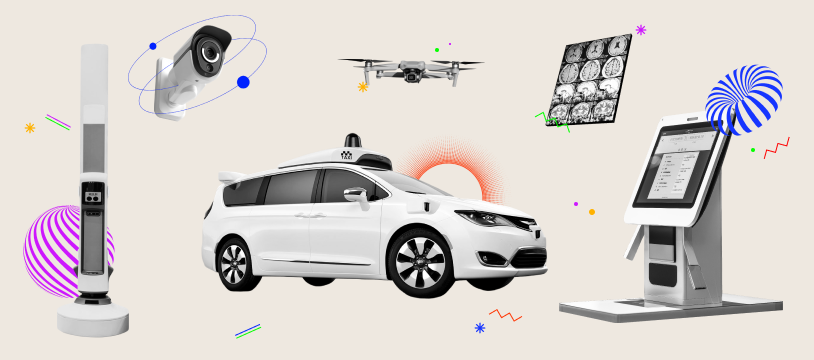

Robotics is the new black in the world of digital transformation.

Quality Assurance for Healthcare

Our team rigorously tests healthcare software to ensure data security, regulatory compliance, and patient confidentiality.

We prioritize the reliability and accuracy of healthcare systems.

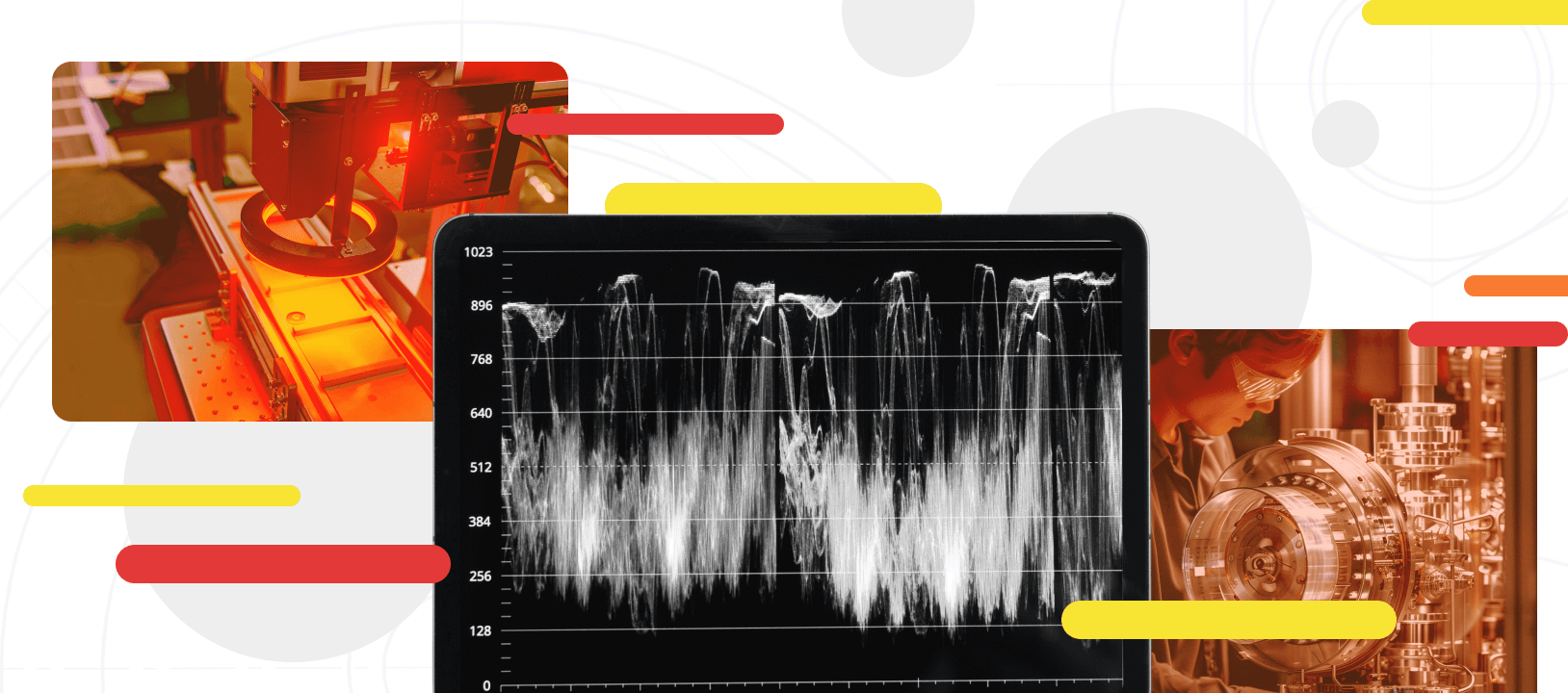

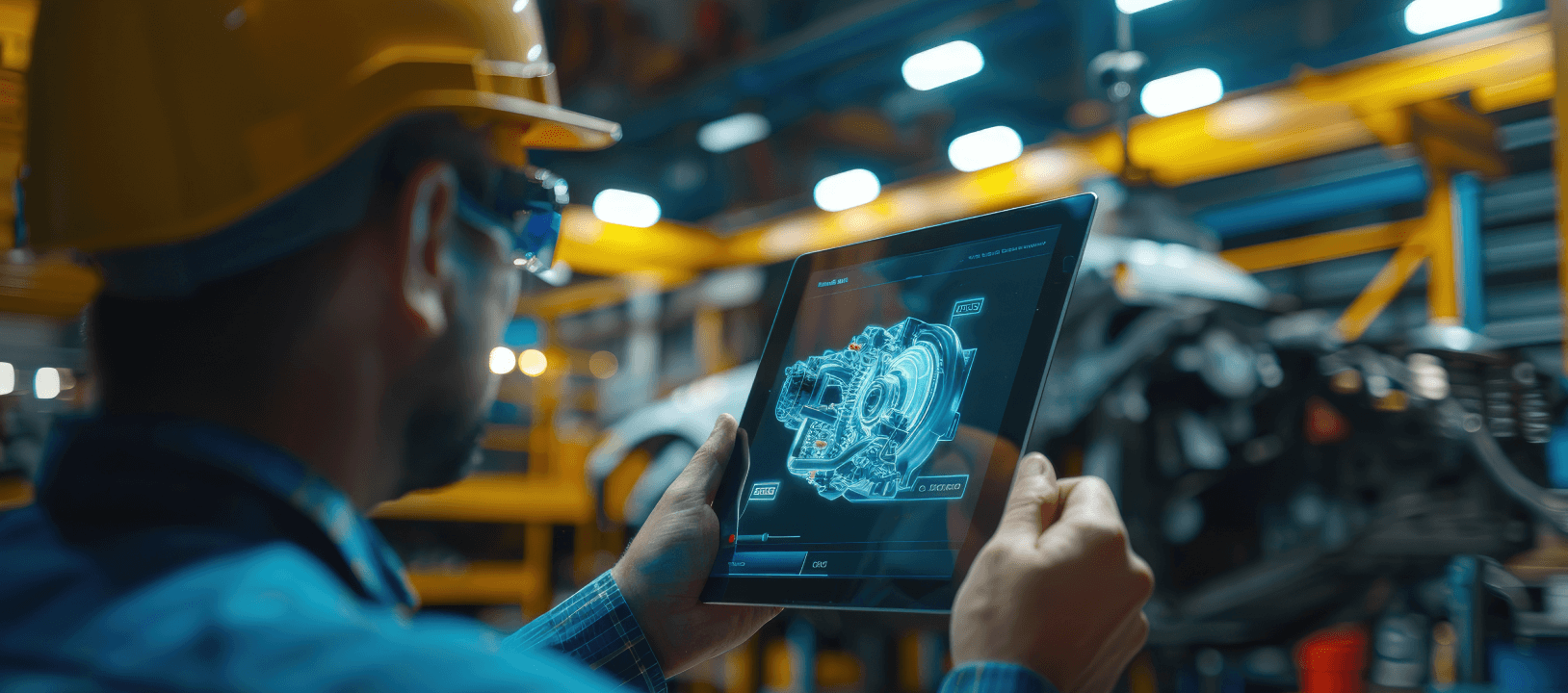

Quality Assurance for Manufacturing

When assuring the quality of software for the manufacturing industry, our focus is on optimizing processes, reducing defects, and enhancing software reliability.

We ensure that manufacturing software operates seamlessly to improve efficiency.

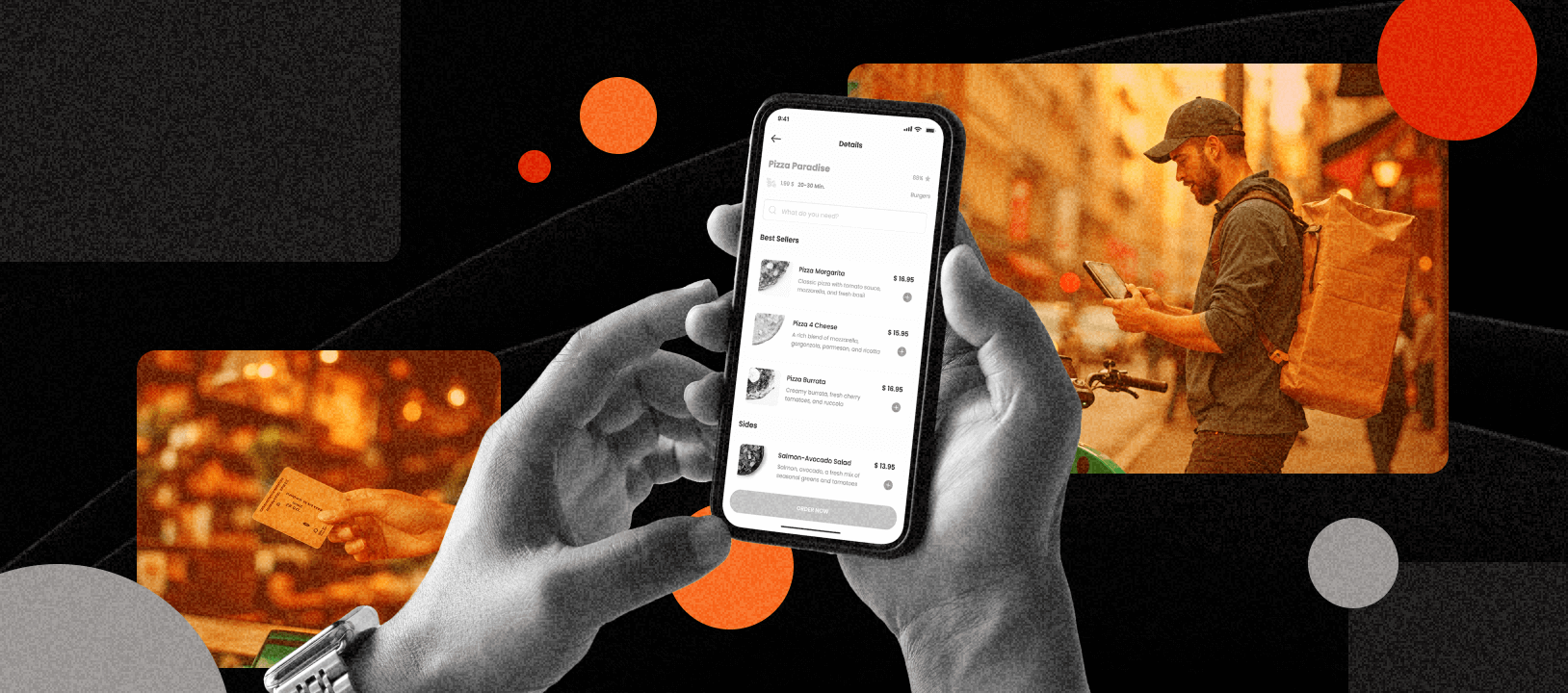

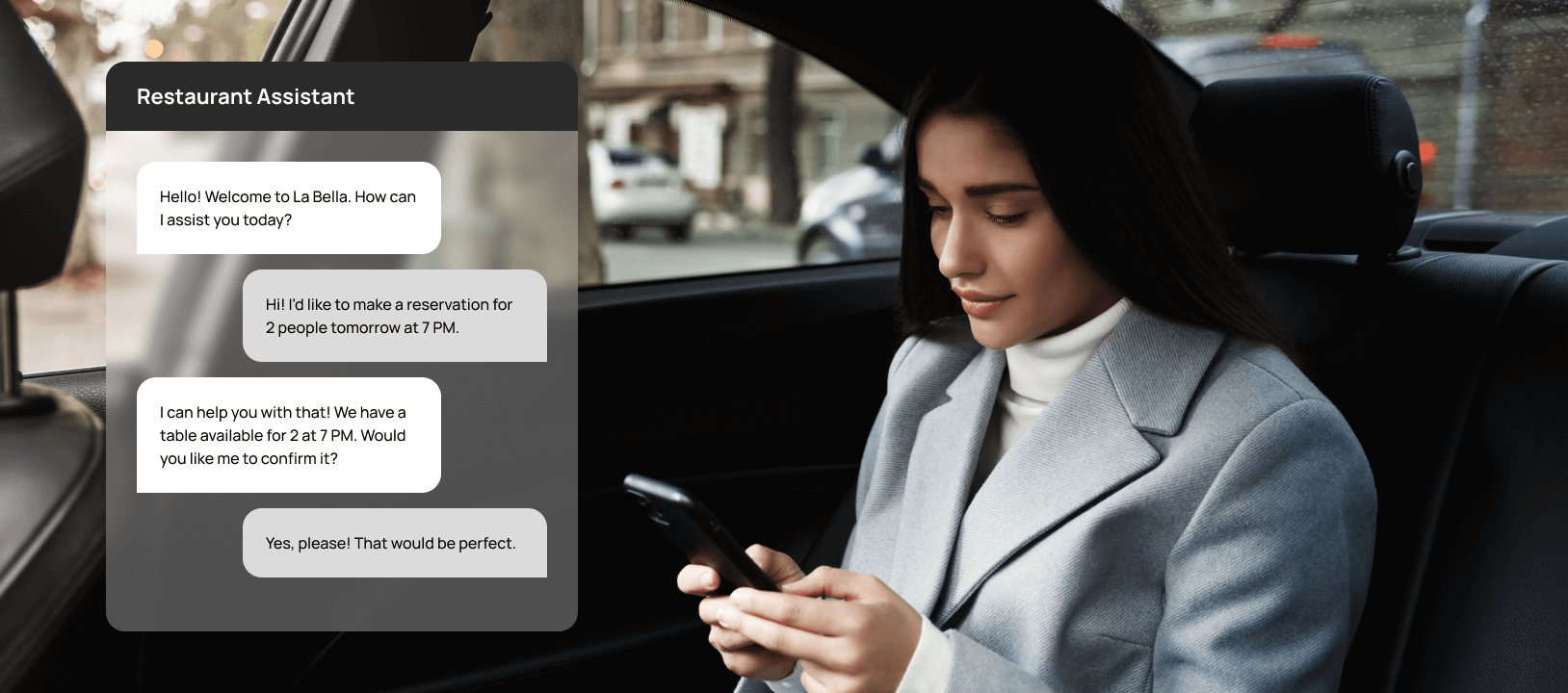

Quality Assurance for Restaurants

We concentrate on usability, reliability, and performance when testing restaurant software.

Our aim is to deliver a seamless dining experience for customers while streamlining operations for restaurant owners.

Quality Assurance for Finance

Our meticulous testing in the finance sector centers on data accuracy, fraud prevention, and regulatory compliance.

We prioritize the financial well-being of our clients and their customers, ensuring trust and security in financial services.

Frequently Asked Questions

What is your approach to testing, and how do you use test automation?

We usually recommend test automation for critical and high-risk flows and for regression testing, but the scope of automation is agreed with the client. Some teams invest in broader automated test suites, while others rely more on manual testing with a smaller set of automated checks. Our goal is to strike a practical balance between quality, speed, cost, and risk rather than apply a one-size-fits-all testing model.

How do you assess and address QA gaps when a project starts?

After onboarding, most new and updated test cases are created alongside new features and releases, and whenever high-risk areas change. The level of formal gap analysis and automation is agreed with the client based on risk and budget. This helps us avoid over-engineering while keeping the most important parts of the system well protected.

How does AI support your code review process in real projects?

When we join an existing project, AI tools help us understand the codebase more quickly by summarizing modules and mapping dependencies. This speeds up onboarding, improves early security and quality checks, and results in more consistent, reliable code. All final decisions remain with our engineers.

What QA metrics do you track?

We look at how quickly critical defects are fixed, how often issues are reopened, and the trend of production incidents after releases.

We agree on the exact metrics and level of reporting with the client. If needed, we can also report more formal metrics such as defect density or test coverage.
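As a rough illustration of how such metrics could be derived from a defect log, here is a minimal Python sketch. The `Defect` record, its field names, and the sample data are hypothetical and not taken from any specific tracker, and defect density is computed with one common definition (defects per thousand lines of code); the exact definitions would be agreed with the client.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean
from typing import List, Optional


@dataclass
class Defect:
    """Hypothetical defect record; field names are assumptions."""
    severity: str                 # e.g. "critical", "major", "minor"
    opened: datetime
    fixed: Optional[datetime]     # None while the defect is still open
    reopened: bool = False        # True if it was reopened after a fix


def mean_hours_to_fix_critical(defects: List[Defect]) -> Optional[float]:
    """Average hours from open to fix, over fixed critical defects only."""
    durations = [
        (d.fixed - d.opened).total_seconds() / 3600
        for d in defects
        if d.severity == "critical" and d.fixed is not None
    ]
    return mean(durations) if durations else None


def reopen_rate(defects: List[Defect]) -> Optional[float]:
    """Share of fixed defects that were reopened at least once."""
    fixed = [d for d in defects if d.fixed is not None]
    return sum(d.reopened for d in fixed) / len(fixed) if fixed else None


def defect_density(defect_count: int, kloc: float) -> float:
    """Defects per thousand lines of code (one common definition)."""
    if kloc <= 0:
        raise ValueError("kloc must be positive")
    return defect_count / kloc


# Made-up sample data for illustration only.
t0 = datetime(2024, 1, 1, 9, 0)
sample = [
    Defect("critical", t0, t0 + timedelta(hours=4)),
    Defect("critical", t0, t0 + timedelta(hours=8), reopened=True),
    Defect("minor", t0, None),
]

print(mean_hours_to_fix_critical(sample))  # 6.0
print(reopen_rate(sample))                 # 0.5
print(defect_density(3, 1.5))              # 2.0
```

Returning `None` when there is nothing to measure keeps an empty reporting period distinct from a genuine value of zero.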

How do you assess the impact of a new or modified requirement on cost, time, and quality?

- Make sure we fully understand the change, why it’s requested, and what will stay the same.

- Review the affected modules, integrations, and data structures to assess their complexity.

- Estimate the extra effort needed for development, testing, and rework, and how it will impact the plan and budget.

- Identify critical flows that will be affected, any additional testing needed, production risks, and required refactoring.

- Present the impact in simple terms and agree on the next steps with the client.

We keep track of all approved changes in the shared backlog. Updated estimates and priorities show how costs, time, and quality will be affected.

Our experts

We are experts in software and hardware engineering. By using and combining cutting-edge technologies, we create unique solutions that transform industries.

Sergey

Lead Software QA Engineer

I currently hold the position of Lead Test Quality Assurance Engineer, specializing in e-Learning projects. My key responsibility is to define testing strategies that make sure the end product meets industry and company standards.

As a QA team manager, I create testing plans, run team meetings to brainstorm solutions to problems, and ensure the work is done on time and to the required quality. My job also involves constant communication with the development team and the customer to convey the needs and requirements of all project participants.

Anna

Senior engineer QA-A

For the past seven years, I have delivered comprehensive quality assurance across healthcare, finance, e-learning, and AI-driven restaurant management systems, ensuring software reliability through strategic testing methodologies.

I lead automated testing initiatives using Java, Selenium WebDriver, and REST Assured, and integrate quality processes into CI/CD pipelines. I direct QA teams through complex project lifecycles, mentor team members, and collaborate with cross-functional Agile teams to deliver high-quality software solutions.

I have extensive expertise in system evaluation, test design, and end-user support, and I consistently optimize testing processes across web, mobile, and API platforms.